Memory requirements for import

As you learned earlier, the Data Importer can be used for small to medium datasets containing less than 1M rows. The Data Importer is a generalized app as you saw that creates all properties in the graph as strings, integers, decimals, datetimes, or boolean, and you need to possibly post-process or refactor the graph after the import. It is also designed to handle a smaller memory footprint, so it may not be useful for all of your data import needs.

When you import using Cypher statements, you have control over the amount of memory used for the import. In Cypher, by default, the execution of your code is a single transaction. In order to process large CSV imports, you may need to break up the execution of the Cypher into multiple transactions.

Using a subquery for the import

You can use this code structure to import a large dataset:

CALL {

// add data to the graph for each row

}If the CSV data is too large, you may need to modify as follows:

LOAD CSV WITH HEADERS

FROM 'https://xxx.com/xxx/large-file.csv'

AS row

CALL {

WITH row

// create data in the graph

} IN TRANSACTIONSPlanning for the import

One advantage of using Cypher for loading your CSV data is that you can perform the type transformations and some of the "refactoring" during the import. That is, you can customize how property types are managed so you need not do any post-processing after the load.

You must inspect and possibly clean the data before you import it. The large CSV data files you will be working with have already been cleaned.

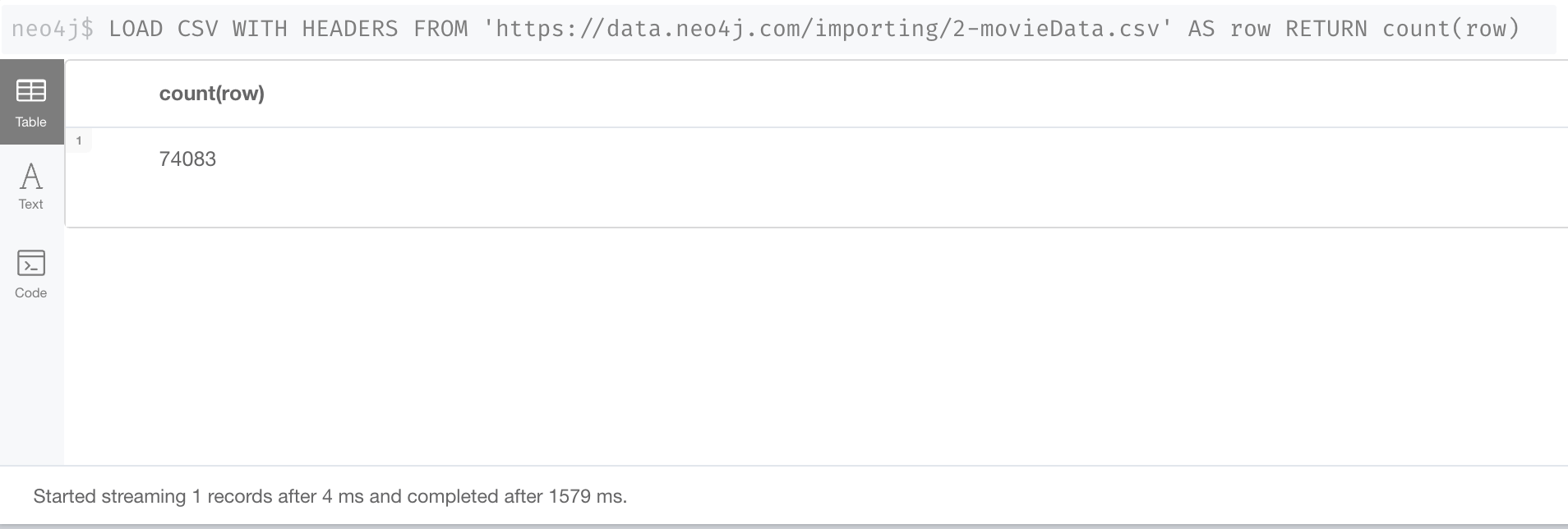

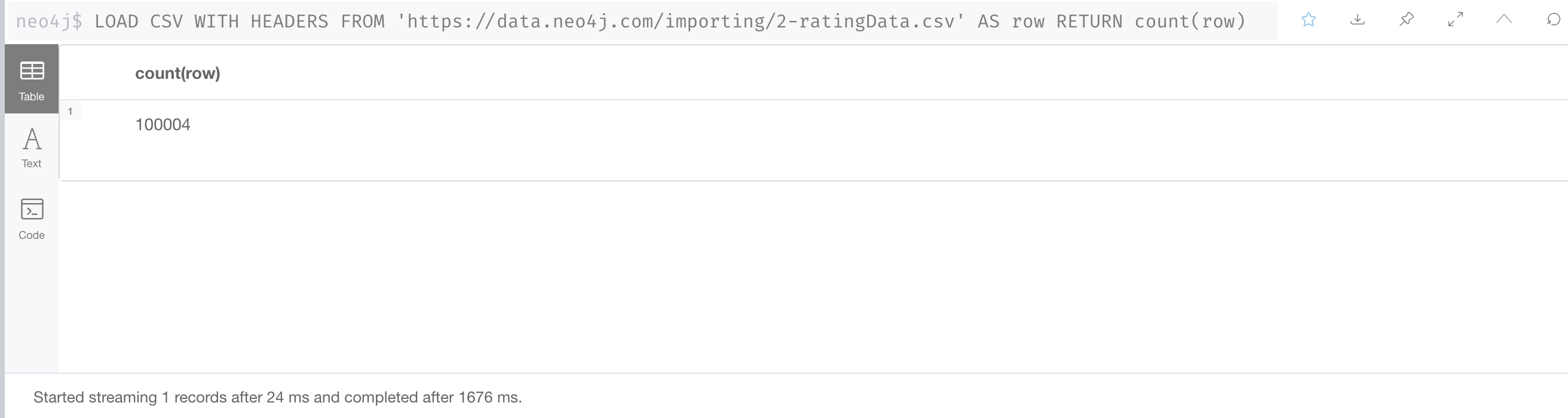

First we determine the number of rows in each file:

They do not exceed the 1M row limitation for the Data Importer, but you may run out of memory during the import so in this course we will use Cypher to load these CSV files.

Planning the Movie, Genre, Person import

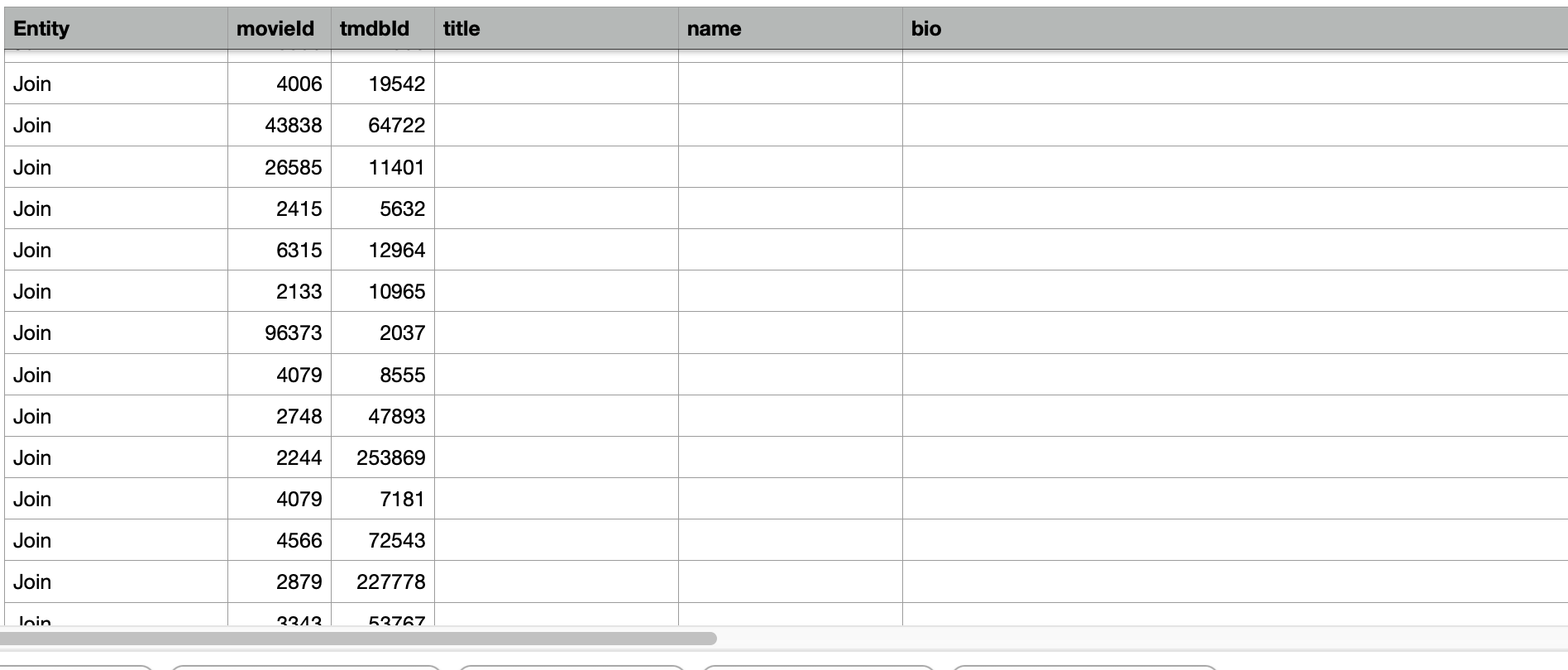

Next, we examine the fields in our first CSV file. Each row in the 2-movieData.csv file represents either an Entity or a relationship between two entities.

Entities

An Entity row has a value of Person or Movie, where the Movie rows have the genre data. We will continue to use these unique IDs for our Movie, Person, and Genre nodes:

-

Movie.movieId

-

Person.tmdbId

-

Genre.name

Here is what the Movie and Person entities look like in our CSV file:

The type of entity is either Person or Movie and each person has a value of tmdbId that uniquely identifies it. Each movie has a value of movieId that uniquely identifies it. Movies have values for things such as title and people have values for things such as name. How we process the data will depend upon the type of entity in the CSV file.

Relationships

A relationship row has an Entity value of Join.

Notice that for the join, we have both a movieId representing a movie and a tmdbId representing a person.

In addition, for each join row we have a Work column that describes whether the data will be used for creating the ACTED_IN or DIRECTED relationships in the graph. Actor rows will have a value for the role and a few director rows also have a value for role.

Multi-pass import processing

We recommend several passes to process this CSV file:

-

Create the Movie nodes and the Genre nodes.

-

Create the Person nodes.

-

Add the Actor labels and the ACTED_IN relationships.

-

Add the Director labels and the DIRECTED relationships.

The advantage of performing the import in multiple passes is that you can check the graph after each import to see if it is getting closer to the data model. If the CSV file were extremely large, you might want to consider a single pass.

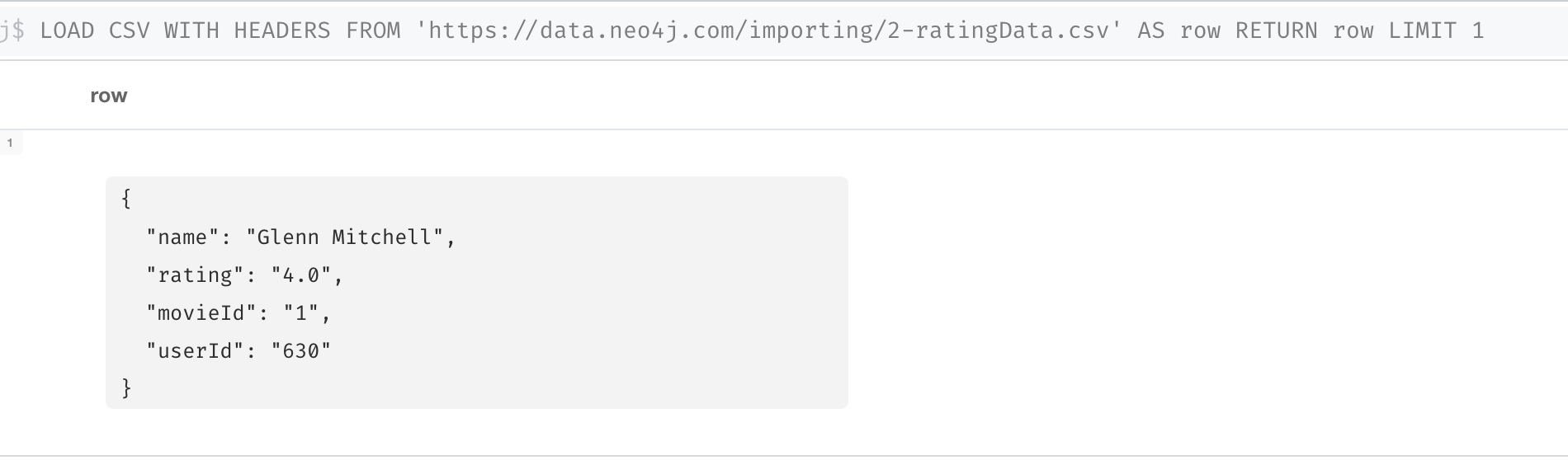

Planning the User import

Here are the fields in our second CSV file.

These fields again are what you worked with earlier. the userId is the unique ID for User nodes and movieId is the unique ID for Movie nodes. We know from our previous import using the Data Importer that these constraints already exist in the graph.

Check your understanding

Importing large CSV files

Why do you use CALL { …} when you import data?

-

❏ To automatically create the nodes using the headers in the CSV file.

-

❏ To lock the entire graph exclusively during the import.

-

❏ To temporarily raise the memory limit for the import.

-

✓ To reduce the amount of memory needed for the import.

Hint

This syntax is called a subquery in Cypher.

Solution

You use CALL {…} in Neo4j Browser to reduce memory needed for the import.

Summary

In this lesson, you learned some considerations for importing large CSV files and when you may want to use Cypher to import data, rather than the Data Importer. In the next Challenge, you will import CSV data with Cypher.